THU was an unforgettable experience. If you ever get the chance to go, I highly recommend it! I don’t have time right now to do a writeup of the full event, but I can tell you about one slice of it: my demo of Big Wet Pixels was a lot of fun!

One big concern I had in the weeks leading up to the event was the logistics. How could I show my system painting a live subject (ideally a volunteer) on stage in front of an audience? For the experience to work well, the setup would have to fit a lot of constraints: I’d have to be able to reach my laptop keyboard and see the screen. My camera would have to see the subject. The subject would have to see what’s happening on the screen, and also see me. And the audience would have to see the screen, the subject, and me. I wasn’t sure there was a solution to this problem in Euclidean 3-space that didn’t violate any laws of physics.

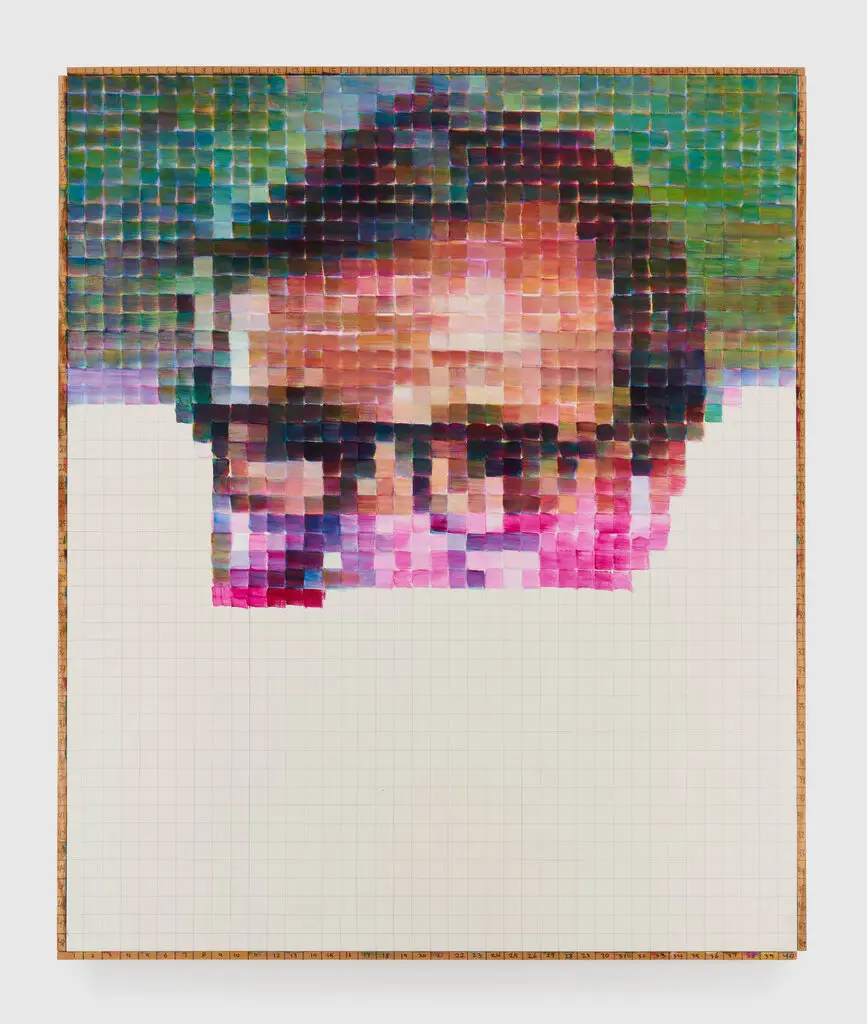

Then I got to visit the venue. And it looked like this:

A stage in the center, five rows of seats all around, and great big LED screens on all four sides of the room! It was perfect. Better than perfect. It’s immersive, intimate, and equitable: there are literally no bad seats in the house. And from the stage, you feel close to every single person in the audience. This was by far the most innovative setup I’ve ever seen for a conference venue.

It took a couple of tech check sessions to get everything working. (Note to self: 15 minutes is not enough time to test out such a complex setup!) I had to make a few last-minute tweaks to my code to make sure it would fit the aspect ratio of the big screens, and to add controls for adjusting the framing of the subject on the fly. (Arrow keys are much quicker than trying to adjust a tripod head supporting a long, heavy lens!) But when the time came for the actual demo, everything worked perfectly.

I didn’t do much advance planning for how I’d operate the system; I just improvised in response to what was happening in the moment. So we only ended up exploring a sliver of the vast parameter space. But the participants who came up on stage seemed to have a lot of fun with it, posing for the camera, making faces, and using their hands and other props.

The audience asked really interesting questions, and I encouraged them to give me suggestions for what to do with the controls. (In a nod to Zach Lieberman, I told them, “the DJ does take requests!”) Before I knew it, we were out of time, and I had to pack up my gear and leave this beautiful room for the next speaker.