I’m midway through a (major, long overdue) overhaul of the fluid transport layer of my watercolor simulation. Made some good progress over the weekend. But more importantly: I made lots of new bugs. Glorious, unrepentant, face-eating bugs. I almost don’t have the heart to squash them.


Big Wet Pixels 9

Continuing to explore the parameter space of this watercolor simulation. Enjoying the contrast between natural and artificial, accidental and planned. Really enjoying what’s starting to happen with color. Finding bugs, fixing bugs, creating new bugs. This is getting close to the point where I’d use it to paint someone’s portrait.

Big Wet Pixels 8

Refinement, and wandering, and more refinement. Taking control, and losing it.

Tonight’s effort was less about pigment, and more about paper and water.

Big Wet Pixels 7

Some refinements on the Chuck Close homage, and then a left turn into something very different. The bugs in my code are a source of unending joy and frustration. Someday, maybe, I’ll fix them, but where’s the fun in that?

Big Wet Pixels 6

New today: exploring making each pixel smarter, with more thoughtful brushstroke planning. Starting to get excited about the shapes and textures that emerge. (In case it’s not obvious, I’m reaching for a Chuck Close vibe here. But his work has all kinds of depth to it; I’m barely scratching the surface so far.) Also, I’ve added some new types of randomized color palettes, including interference pigments on dark paper. So many happy accidents. I don’t think I’ll ever get bored of this.

Big Wet Pixels 5

Big Wet Pixels 5 from Cassidy Curtis on Vimeo.

Bigger, wetter, more pixelated! Playing with different strategies for sub-pixel brushstroke planning. There are so many possibilities…

Big Wet Pixels 3

Still exploring big wet pixels (originally inspired by the #genuary4 prompt) using my watercolor simulation in Unity. Now the pixels are actually pixels: given a random selection of pigments and paper, they try their best to match the color coming in through my webcam. Lovely glitches ensue.

To get this working, I had to go back and solve an old problem that’s bothered me for decades: given an arbitrary set of three pigments and a paper, what combination of pigment densities will produce the closest match to any given RGB color? This is non-trivial, because the gamut described by three Kubelka-Munk pigments is non-linear, not necessarily convex, and might not even be an embedding! In our 1997 paper we addressed that problem in a really crude way, which I was never very happy with: quantize the pigment densities into bins, and find the nearest bin in RGB space using a 3-d tree search. So it gave me great satisfaction last weekend to implement a continuous solution using gradient descent.

The curved RGB color gamut described by a trio of semi-opaque white, amber and green pigments on purple paper. The white sphere represents the RGB color we’d like to match. A smaller, colored sphere represents the closest approximation that can be produced within the color gamut. A thin, meandering line shows the path taken from the middle of the gamut via gradient descent.
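For readers curious what a continuous solution along these lines might look like, here is a minimal sketch. It is built on assumptions of my own, not on the simulation's actual code. Each pigment is a hypothetical per-channel Kubelka-Munk (K, S) pair. The three pigments are mixed in a single layer, with K and S scaling linearly with density, and that layer is composited over the paper using the standard Kubelka-Munk layer and compositing formulas. The names `WHITE`, `AMBER`, `GREEN`, `PURPLE_PAPER`, `match_color`, and all numeric values are illustrative. The descent uses finite-difference gradients, clamps densities to a fixed range, and halves the step size whenever a step fails to improve the match. Because the gamut can be curved and non-convex, this finds a local best match, not necessarily the global one.

```python
import math

# Hypothetical pigments: ((K_r, K_g, K_b), (S_r, S_g, S_b)).
# Illustrative values only; K and S must be > 0 in every channel.
WHITE = ((0.05, 0.05, 0.05), (2.0, 2.0, 2.0))
AMBER = ((0.1, 0.5, 3.0), (0.3, 0.3, 0.3))
GREEN = ((2.5, 0.2, 2.0), (0.3, 0.3, 0.3))
PURPLE_PAPER = (0.6, 0.3, 0.7)  # hypothetical paper reflectance (R, G, B)


def km_layer(k, s, x=1.0):
    """Kubelka-Munk reflectance R and transmittance T of one layer."""
    a = 1.0 + k / s
    b = math.sqrt(a * a - 1.0)
    bsx = b * s * x
    c = a * math.sinh(bsx) + b * math.cosh(bsx)
    return math.sinh(bsx) / c, b / c


def reflectance(densities, pigments, paper):
    """RGB reflectance of the pigments mixed in one layer over the paper.

    Assumes K and S scale linearly with each pigment's density.
    """
    rgb = []
    for ch in range(3):
        k = sum(d * pig[0][ch] for d, pig in zip(densities, pigments))
        s = sum(d * pig[1][ch] for d, pig in zip(densities, pigments))
        rg = paper[ch]
        if s < 1e-12:  # no pigment at all: bare paper shows through
            rgb.append(rg)
            continue
        r, t = km_layer(k, s)
        # Composite the pigment layer over the paper background.
        rgb.append(r + t * t * rg / (1.0 - r * rg))
    return rgb


def error(densities, pigments, paper, target):
    """Squared RGB distance between the mixed color and the target."""
    mixed = reflectance(densities, pigments, paper)
    return sum((m - t) ** 2 for m, t in zip(mixed, target))


def match_color(target, pigments, paper, steps=2000, lr=0.5, d_max=2.0, h=1e-5):
    """Projected gradient descent on pigment densities in [0, d_max]."""
    d = [d_max / 2.0] * len(pigments)  # start in the middle of the range
    best = error(d, pigments, paper, target)
    for _ in range(steps):
        # Forward-difference estimate of the gradient.
        grad = []
        for i in range(len(d)):
            probe = list(d)
            probe[i] += h
            grad.append((error(probe, pigments, paper, target) - best) / h)
        # Take a step, then clamp each density back into [0, d_max].
        cand = [min(d_max, max(0.0, di - lr * gi)) for di, gi in zip(d, grad)]
        cand_err = error(cand, pigments, paper, target)
        if cand_err < best:
            d, best = cand, cand_err
        else:
            lr *= 0.5  # overshot or stuck: shrink the step
            if lr < 1e-12:
                break
    return d


if __name__ == "__main__":
    pigments = [WHITE, AMBER, GREEN]
    densities = match_color([0.7, 0.55, 0.4], pigments, PURPLE_PAPER)
    print(densities, reflectance(densities, pigments, PURPLE_PAPER))
```

Starting from the middle of the density range mirrors the meandering path in the figure. With a different starting point or pigment set, the descent may settle into a different local minimum, which is one reason a coarse search (like the old binned 3-d tree) can still be a useful way to pick a starting point.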

Genuary 4: More Big Wet Pixels

Getting more aggressive with color and texture…

Genuary 4, 2024: Big Wet Pixels (and No Palettes)

It took me a minute to get going this year, mainly because my watercolor simulation code is a big pile of spaghetti that’s been artfully splattered across the walls and left to dry in place. I’ll have to do a bit of cleaning before I can have more serious fun with it. But I did at least manage to get my synthetic paper layer working, more or less. This video covers the prompts for both #genuary4 (Pixels) and #genuary2 (No Palettes). Happy #genuary to all who observe!

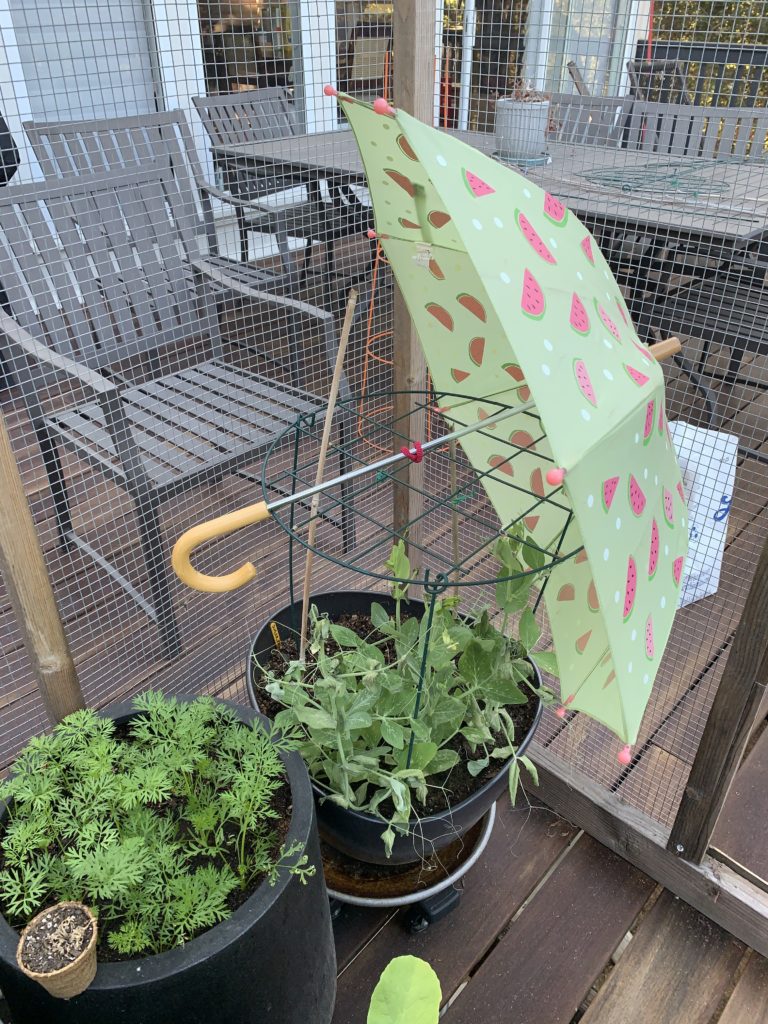

Garden Timelapse: Sugar Ann Peas

I’ve continued to shoot timelapse video of the various veggies we grow in the garden. Here’s one more from last fall, of some sugar snap peas. They grew so fast I had to shoot twice a day! I was blown away by the origami-like way each pair of leaves unfolds as the vines grow, and the branching tendrils that whip out and grab on to whatever they can find. You might also notice some sudden changes in early September: we had a brutal heat wave that almost killed the plants. Some quick thinking and an old kid-sized umbrella saved them from total destruction. Not all of the plants survived, but the ones that made it produced crunchy pods as sweet as candy. Will definitely grow these again!